This article provides guidelines for estimating how much you can save by fixing bugs earlier in the development process. It explains the Rule of Ten using simple calculation examples and offers recommendations on how to cut your development costs.

The Rule of Ten: Late Software Testing Drives Up Development Costs

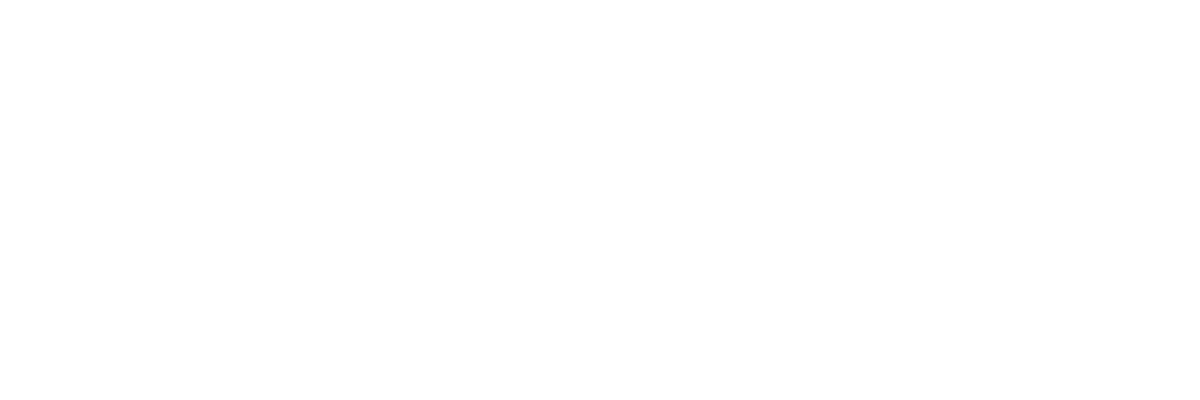

The Rule of Ten states that the further a bug moves undiscovered into the late stages of a development process, the higher the costs of eliminating it.

Finding bugs in earlier stages of the development process saves time and resources

Companies that do not spend the time and money to fix bugs in the early stages of the development process tend to experience downtime, performance issues, and security vulnerabilities after release. Fixing those bugs in production puts stress on employees and may even result in lost customers and revenue, as bugs and security issues can erode trust in a product or in your company. That's why it is considered best practice to test software as often and as early as possible.

The Rule of Ten is supported by multiple studies from Japan, the USA, and Great Britain, which dealt with the causes of product and quality defects. All these analyses delivered almost the same results: 70% of all product defects were caused by a failure during the stages of planning, design, or preparation.

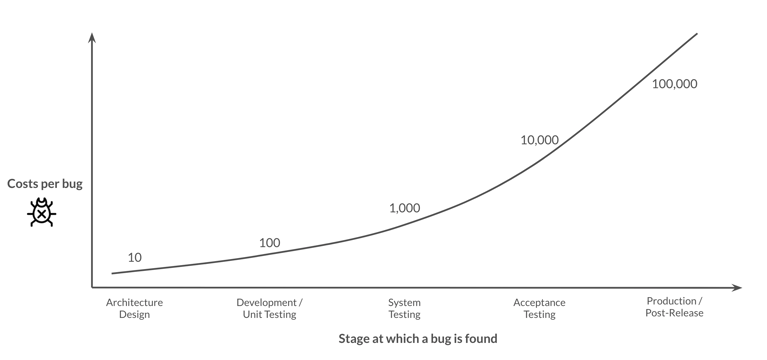

Even though the studies focused on manufacturing processes, the same pattern can be found in modern software development. If it takes €100 to fix a defect at unit testing, it takes €1,000 at system testing, €10,000 at acceptance testing, and €100,000 after release.

The Importance of Bug Detection Rates

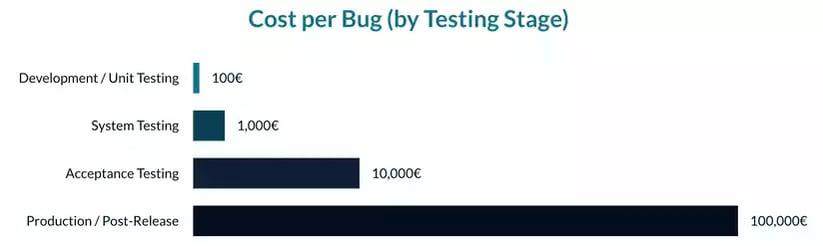

The second part of the Rule of Ten addresses the importance of a high bug detection rate. Let's say that you have a 90% bug detection rate, i.e., each level catches 90% of the bugs that reach it and lets the other 10% through. In other words, you will find and correct 90% of bugs in the development stage, and the remaining 10% go into system testing.

In system testing, you will find and correct 90% of the remaining bugs, with 10% of those bugs going into acceptance testing. Therefore, after system testing, you should have found and corrected 99% of the total project bugs. In acceptance testing, you should again find and correct 90% of the remaining 1% of bugs. By the time you go into production, you should have 99.9% clean code.
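The arithmetic above can be checked with a few lines of Python. This is only a minimal sketch: the function name `remaining_fraction` is made up for illustration, and it assumes the same detection rate at every level, as in the example.

```python
def remaining_fraction(detection_rate: float, levels: int) -> float:
    """Fraction of the original bugs still present after `levels` test levels,
    assuming every level catches the same share of the bugs that reach it."""
    return (1.0 - detection_rate) ** levels


# Walk through the three levels named in the text with a 90% detection rate.
levels = ["development", "system testing", "acceptance testing"]
for count, name in enumerate(levels, start=1):
    left = remaining_fraction(0.90, count)
    print(f"after {name}: {left:.1%} of bugs remain, {1 - left:.1%} corrected")
```

The second and third lines of output correspond to the 99% and 99.9% figures quoted above.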

Development costs with a bug detection rate of 90%

With a 90% detection rate at every level, only 0.1% of all bugs make it into production. If your quality assurance program detects fewer than 90% of bugs, however, your development costs rise drastically. Dropping the detection rate to just 80% is enough to multiply your costs.

A lower detection rate also increases the number of bugs that make it into production, with a corresponding increase in follow-up costs and reputation loss. Instead of being 99.9% clean, the application will be 99.2% clean, with 0.8% of all bugs remaining. The only stage where fixing bugs costs less than in the 90% scenario is the development stage, because fewer bugs are caught there. In the other three downstream levels, the costs skyrocket.

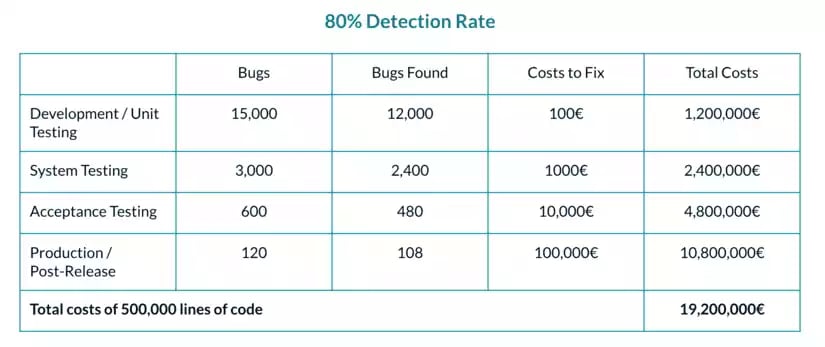

Development costs with a bug detection rate of 80%

The final costs are more than three times higher than in the previous example.
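To see where a factor like this comes from, the two scenarios can be recomputed in a short script. This is a rough sketch under stated assumptions, not a reproduction of the charts: the total of 1,000 bugs is hypothetical, the development stage is priced at the €100 unit-testing figure from the earlier example, and every bug that reaches production is assumed to be fixed there eventually. The names `STAGE_COSTS` and `total_fix_cost` are illustrative only.

```python
# Cost in euros to fix one bug at each level (tenfold increase per level).
STAGE_COSTS = [
    ("development", 100),
    ("system testing", 1_000),
    ("acceptance testing", 10_000),
    ("production", 100_000),
]


def total_fix_cost(total_bugs: int, detection_percent: int) -> int:
    """Total cost of fixing all bugs for a given per-level detection rate."""
    remaining = total_bugs
    total = 0
    # The three pre-release levels each catch a fixed share of what reaches them.
    for _name, cost in STAGE_COSTS[:-1]:
        found = remaining * detection_percent // 100
        total += found * cost
        remaining -= found
    # Assumption: whatever slips through is fixed in production.
    total += remaining * STAGE_COSTS[-1][1]
    return total


cost_90 = total_fix_cost(1_000, 90)
cost_80 = total_fix_cost(1_000, 80)
print(f"90% detection: EUR {cost_90:,}")
print(f"80% detection: EUR {cost_80:,}")
print(f"ratio: {cost_80 / cost_90:.2f}")
```

Under these assumptions the 80% scenario comes out at roughly 3.7 times the cost of the 90% scenario, and most of the difference comes from the bugs fixed in production.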

Where Do the Costs Come From?

Let’s take a typical Professional Services Automation (PSA) and Quality Assurance (QA) process, as it still exists in many companies that practice “Agile”, “DevOps”, or even “DevSecOps”. For this example, let’s assume that within a big corporate project, an application is built over the course of two years and is only then handed over to the product security manager (PSM).

1. Manual Efforts

The PSM then defines requirements and initiates a manual testing process. First, penetration testing is conducted with OWASP ZAP or commercial software. These efforts need to be documented and reviewed, which is already time-consuming. Most of the vulnerabilities found during this phase are trivial and could have been avoided if testing had been done earlier or automated.

The pentester then has to tune the tooling, evaluate code coverage and results, tune again, rank the vulnerabilities, and write reports before the results can be fed back to the PSM. The PSM then evaluates the results and consults the team to find out if someone has a quick fix at hand. In most cases, however, this is a dead end.

2. Understanding Old Code

In the next step, the PSM discusses the bugs with the pentester and the project manager. The PSM then passes the vulnerabilities on to the product manager (PM), who, after several feedback loops, finally defines which bugs are critical. The PM then assigns them to the different “Agile” teams.

The “Agile” teams then do their best to trace the bugs back to their roots in order to fix them. This is easier said than done, since in most cases, more than a year has passed since the buggy code was written. Before they can actually start fixing the bugs, programmers have to first get an understanding, not just of their own mess, but often also of the mess of their colleagues who have since switched teams or left the company.

3. Consequential Errors

It goes without saying that this further delays the release. In some cases, simply fixing the bug is not enough, as the bug has caused consequential errors that also require closer inspection. In other cases, changes to the architecture are necessary, taking up more manual effort and causing further delays.

4. Additional Pentests

During the next step, additional pentests are conducted. If more vulnerabilities are found in these tests, the testing cycle has to be repeated before the application can be approved. In parallel to the PSM's work, there is also a QA process, but it tends to find bugs similar to those found in pentesting, especially in C/C++.

Early Testing = Saving Resources

The example above illustrates how resource-consuming late-stage bugs can be. Now imagine if the majority of them, especially the trivial ones, could be prevented during the early stages.

This would cut the effort required to fix them to a fraction and reduce the number of vulnerabilities that make it to the later stages. To achieve this, it is considered best practice to introduce automated testing early in the CI/CD pipeline.

The Best Way to Reduce Development Costs: Invest in Software Testing

So, if you would like to cut your development costs and increase the quality of your released applications, you should definitely take a look at your software testing process. Considering the skyrocketing costs as well as the possible reputation loss caused by buggy releases, you should not hesitate to invest in your quality assurance team and software testing tools.

As described above, it is particularly cost-effective to detect bugs during the development stage. For this reason, your focus should be on supporting your developers with application testing tools that automatically detect bugs during this stage. DevSecOps methods such as static analysis, dynamic analysis, or feedback-based fuzzing are particularly suitable for this purpose.
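To give a feel for what automated bug detection during development can look like, here is a deliberately naive random-input harness in Python. It is not feedback-based fuzzing, since it does not use coverage information to guide input generation, and it is unrelated to any particular tool's API. The function under test, `parse_length_prefixed`, and the helper `random_fuzz` are hypothetical and exist only for this illustration.

```python
import random


def parse_length_prefixed(data: bytes) -> bytes:
    """Hypothetical function under test: the first byte is the payload length."""
    if not data:
        raise ValueError("empty input")
    length = data[0]
    payload = data[1:1 + length]
    if len(payload) != length:
        raise ValueError("truncated payload")
    return payload


def random_fuzz(target, runs: int = 1000, seed: int = 0) -> list:
    """Call `target` with random byte strings and collect unexpected exceptions."""
    rng = random.Random(seed)  # fixed seed keeps the run reproducible
    crashes = []
    for _ in range(runs):
        size = rng.randrange(0, 8)
        data = bytes(rng.randrange(256) for _ in range(size))
        try:
            target(data)
        except ValueError:
            pass  # documented rejection of malformed input, not a bug
        except Exception as exc:
            crashes.append((data, exc))
    return crashes


print(f"unexpected failures: {len(random_fuzz(parse_length_prefixed))}")
```

A check like this can run on every commit, so a missing bounds check surfaces minutes after it is written rather than in a pentest months later. A real feedback-based fuzzer would additionally track which code paths each input reaches and mutate inputs accordingly.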

If you are interested in improving your application quality and reducing your development costs at the same time, automated security testing platforms that allow developers to detect bugs early on are certainly worth considering.

Try out CI Fuzz, Code Intelligence's security testing platform, to find out how automated software security testing can help you develop secure and reliable software.